Processing text, especially handwritten text, is important. It has significant applications in document analysis, such as examining forged signatures and anonymous letters. In this activity, image processing techniques from the previous activities are used to extract the individual letters of a handwritten text. The letters are then labeled to distinguish each one from the others.

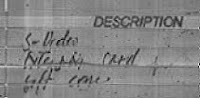

Starting from a cropped portion of a document containing handwritten text, filtering with some weighting was applied to remove the lines in the text. The filtering also enhances the details of the letters.

Original

To segment the image, thresholding is applied to isolate the signal coming from the letters, so that each blob in the resulting image can be processed as a letter.

Resulting binarized image
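The thresholding step can be sketched in a few lines of plain Python. This is only an illustration, not the code used for the results here: the function name `binarize`, the cutoff of 128, and the assumption of dark ink on light paper (so ink maps to 1) are all choices made for the example.

```python
def binarize(gray, threshold=128):
    """Return 1 where a pixel is darker than `threshold` (assumed ink), else 0."""
    return [[1 if px < threshold else 0 for px in row] for row in gray]


# Tiny made-up grayscale patch: values near 255 are paper, low values are ink.
gray = [
    [250, 245, 30, 240],
    [248, 20, 25, 243],
    [251, 247, 35, 249],
]

binary = binarize(gray)
for row in binary:
    print("".join("#" if v else "." for v in row))
```

In practice the cutoff would be chosen from the histogram of the filtered image rather than fixed in advance.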

Since unwanted artifacts (such as lines and dots) still remained, morphological operations were applied to clean the image. In this case the opening operation, which is erosion followed by dilation with the same structuring element, was used.

Cleaning of image by opening operation (dilation after erosion)
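As a rough sketch of how opening removes small specks while keeping larger blobs, the snippet below implements binary erosion and dilation on lists of 0/1 values. The 3x3 square structuring element and the helper names `erode`, `dilate` and `opening` are assumptions for illustration; the structuring element actually used for the result above is not stated.

```python
# Assumed structuring element: a 3x3 square given as (dy, dx) offsets.
SQUARE_3X3 = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def erode(img, se):
    """Keep a pixel only if every offset of `se` lands on foreground."""
    h, w = len(img), len(img[0])
    return [[int(all(0 <= y + dy < h and 0 <= x + dx < w and img[y + dy][x + dx]
                     for dy, dx in se))
             for x in range(w)] for y in range(h)]


def dilate(img, se):
    """Stamp a copy of `se` onto every foreground pixel."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if img[y][x]:
                for dy, dx in se:
                    if 0 <= y + dy < h and 0 <= x + dx < w:
                        out[y + dy][x + dx] = 1
    return out


def opening(img, se):
    """Erosion followed by dilation with the same structuring element."""
    return dilate(erode(img, se), se)


# A 3x3 blob (kept) plus one isolated dot at row 0, column 5 (removed).
noisy = [
    [0, 0, 0, 0, 0, 1, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
cleaned = opening(noisy, SQUARE_3X3)
for row in cleaned:
    print("".join("#" if v else "." for v in row))
```

Anything smaller than the structuring element disappears, which is also why thin strokes of a letter can be lost if the element is too large.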

Initial labeling of the letters was done to determine whether each letter was detected. This also tests whether each letter is well segmented from the background and from other letters.

Labeled image of each letter

Ideally, each letter has a color distinct from that of every other letter.
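Labeling amounts to finding connected components in the binary image. The sketch below uses a breadth-first flood fill with 8-connectivity; the function name `label_blobs` and the choice of 8-connectivity are assumptions for this example, not necessarily what was used to produce the labeled image above.

```python
from collections import deque


def label_blobs(binary):
    """Give every 8-connected foreground blob its own integer label (1, 2, ...)."""
    h, w = len(binary), len(binary[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if binary[y][x] and not labels[y][x]:
                count += 1                      # new blob found
                labels[y][x] = count
                queue = deque([(y, x)])
                while queue:                    # flood fill from the seed pixel
                    cy, cx = queue.popleft()
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            ny, nx = cy + dy, cx + dx
                            if (0 <= ny < h and 0 <= nx < w
                                    and binary[ny][nx] and not labels[ny][nx]):
                                labels[ny][nx] = count
                                queue.append((ny, nx))
    return labels, count


binary = [
    [1, 1, 0, 0, 1],
    [1, 0, 0, 0, 1],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 1, 0],
]
labels, count = label_blobs(binary)
print(count)
for row in labels:
    print(row)
```

If two letters touch, they share a label, so the blob count is a quick check on how well the letters were separated.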

To obtain the basic form of the letters, the thinning morphological operation was applied. The result shows the shape and structure of each letter of the handwritten text. Curvature and stroke length are some of the characteristics that could be measured for each letter from these results.

Thinning and labeling of the letters
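For illustration, one common thinning procedure is the Zhang-Suen algorithm, sketched below. It is swapped in here as an example only, since the thinning routine actually used is not specified. The sketch assumes the image has at least a one-pixel background border, because border pixels are never examined.

```python
def zhang_suen_thin(img):
    """Thin a 0/1 image with the Zhang-Suen two-pass scheme (returns a copy)."""
    img = [row[:] for row in img]
    h, w = len(img), len(img[0])

    def neighbours(y, x):
        # P2..P9, clockwise, starting from the pixel directly above.
        return [img[y - 1][x], img[y - 1][x + 1], img[y][x + 1], img[y + 1][x + 1],
                img[y + 1][x], img[y + 1][x - 1], img[y][x - 1], img[y - 1][x - 1]]

    changed = True
    while changed:
        changed = False
        for step in (0, 1):
            to_clear = []
            for y in range(1, h - 1):
                for x in range(1, w - 1):
                    if not img[y][x]:
                        continue
                    n = neighbours(y, x)
                    b = sum(n)  # number of foreground neighbours
                    # number of 0 -> 1 transitions around the pixel
                    a = sum(n[i] == 0 and n[(i + 1) % 8] == 1 for i in range(8))
                    p2, p4, p6, p8 = n[0], n[2], n[4], n[6]
                    if step == 0:
                        cond = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                    else:
                        cond = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                    if 2 <= b <= 6 and a == 1 and cond:
                        to_clear.append((y, x))
            for y, x in to_clear:  # delete in parallel after the full scan
                img[y][x] = 0
            changed = changed or bool(to_clear)
    return img


# A thick horizontal stroke, 3 pixels tall, surrounded by background.
stroke = [[0] * 9] + [[0] + [1] * 7 + [0] for _ in range(3)] + [[0] * 9]
thinned = zhang_suen_thin(stroke)
for row in thinned:
    print("".join("#" if v else "." for v in row))
```

The thick stroke collapses to a one-pixel-wide line along its middle, which is the kind of skeleton from which curvature and length could then be measured.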

The results obtained were not very satisfactory. One major problem is that the initial filtering of the lines also removed some of the details of the letters. Some letters were even cut apart and no longer have the same form.

There are some morphological operations that might alleviate some of these problems. However, the basic operations applied here were insufficient, and there are probably structuring elements that are more appropriate.

Furthermore, it is important that the document image to be processed has reasonably well-defined letter edges from the start. Blurring introduced when capturing the image of the document can seriously affect the segmentation, because letters become hard to isolate from adjacent letters. This could be improved by applying, for example, a deblurring filter (deconvolution), which would help recover detail and give a sharper image.

Cropped image document page.

Instances of the word DESCRIPTION, highlighted by the peak points of this image

Using a sample image of the word DESCRIPTION taken from the filtered and binarized results obtained previously, the instances of the word are highlighted in the cropped document image shown above. Correlation was applied to detect each instance of the word, with the sample DESCRIPTION image as the template.
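The idea behind the correlation step can be sketched with a direct (spatial-domain) cross-correlation on small binary arrays. This is an assumption-laden illustration rather than the procedure used above, which may well have been FFT-based: the helper names `correlate` and `peak_positions` are made up, and pixels are remapped from 0/1 to -1/+1 so that the score reaches its maximum only where the window matches the template exactly.

```python
def correlate(image, template):
    """Slide `template` over `image`; score each offset by the sum of products
    of the +/-1 encoded pixels (higher means a better match)."""
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    scores = []
    for y in range(ih - th + 1):
        row = []
        for x in range(iw - tw + 1):
            row.append(sum(
                (2 * image[y + j][x + i] - 1) * (2 * template[j][i] - 1)
                for j in range(th) for i in range(tw)
            ))
        scores.append(row)
    return scores


def peak_positions(scores):
    """Return the (row, column) offsets where the correlation is highest."""
    best = max(max(row) for row in scores)
    return [(y, x) for y, row in enumerate(scores)
            for x, v in enumerate(row) if v == best]


# Toy "word": a small L-shaped pattern that appears twice in the toy "page".
template = [
    [1, 0],
    [1, 1],
]
page = [
    [1, 0, 0, 0, 0, 0],
    [1, 1, 0, 1, 0, 0],
    [0, 0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]
scores = correlate(page, template)
peaks = peak_positions(scores)
print(peaks)
```

Each peak marks the top-left corner of a window that matches the template, which corresponds to the highlighted points in the correlation image.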

For this activity, I would like to give myself a grade of 9 because, even though the image processing techniques previously studied have significant applications here, the results obtained were somewhat unacceptable. What matters, however, is that the techniques studied were implemented in this activity in the proper manner (and in the right instances), to some extent.

I would like to acknowledge Winsome Chloe Rara, Jay Samuel Combinido, Mark Jayson Villangca and Miguel Sison for discussions regarding this activity. I would also like to thank our professor, Dr. Maricor Soriano, for the guidance.
