Several patterns were generated in Scilab, and their corresponding 2D Fourier transforms are shown above. (All of the patterns are symmetric.)

Code for generating patterns:

Annulus:

x = [-64:1:64];

[X,Y] = meshgrid(x);

r = sqrt(X.^2 + Y.^2);

annulus = zeros(size(X,1), size(X,2));

annulus(find(r <= 32)) = 1.0;

annulus(find(r <= 16)) = 0.0;

Square:

sq = zeros(129, 129);

temp = ones(33, 33);

sq(49:81, 49:81) = temp;

Square Annulus:

sqannulus = sq;

temp2 = zeros(17, 17);

sqannulus(57:73, 57:73) = temp2;

Double Slit:

slit = zeros(129, 129);

slit(:, 55) = 1.0;

slit(:, 75) = 1.0;

Two Dots:

dot = zeros(129, 129);

dot(65, 55) = 1.0;

dot(65, 75) = 1.0;
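The symmetric patterns above have transforms that can be predicted in closed form, which makes them easy to check numerically. The sketch below is a minimal check, assuming Scilab's built-in `fft2`; it rebuilds the two-dot and double-slit arrays and compares their transform modulus with the expected fringe profile. For two points d = 20 pixels apart in an N = 129 grid, the modulus should be 2|cos(πvd/N)| along the column-frequency index v (v = 0, ..., N-1) and constant along the rows; the double slit should carry the same profile, scaled by N, only in the zero-frequency row. The variables `expected`, `err_dot`, `err_slit_row` and `err_slit_rest` are illustrative names, not part of the original code.

```scilab
N = 129;          // grid size used for the patterns above
d = 75 - 55;      // separation of the dots / slits in pixels

// Rebuild the two-dot and double-slit patterns (same as above)
dot = zeros(N, N); dot(65, 55) = 1.0; dot(65, 75) = 1.0;
slit = zeros(N, N); slit(:, 55) = 1.0; slit(:, 75) = 1.0;

// Unshifted transforms: index 1 holds the zero frequency
Fdot = abs(fft2(dot));
Fslit = abs(fft2(slit));

// Expected fringe profile along the column-frequency axis v = 0..N-1
v = 0:N-1;
expected = 2 * abs(cos(%pi * v * d / N));

// Two dots: every row of |F| should equal the cosine profile
err_dot = max(abs(Fdot - ones(N, 1) * expected));

// Double slit: only the first (zero-frequency) row is non-zero,
// and it carries the same profile scaled by N
err_slit_row = max(abs(Fslit(1, :) - N * expected));
err_slit_rest = max(abs(Fslit(2:N, :)));

mprintf("two dots: %g, slit row: %g, slit rest: %g\n", err_dot, err_slit_row, err_slit_rest);
```

All three errors should be at the level of floating-point round-off. The slit is simply the two dots stretched along the rows, and that stretching squeezes their transform into a single row.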

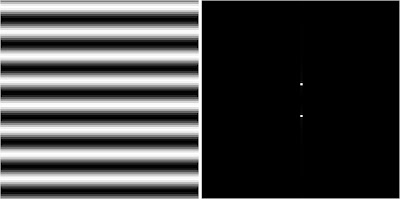

In this activity, the anamorphic property of the Fourier transform is analyzed. First, a 2D sinusoid (resembling a corrugated roof) is created, and its Fourier transform is computed to examine the spatial frequencies present in the image. The frequency of the sinusoid is then varied (f = 4, 8, and 16). The results are shown below.

f = 16

2D sinusoids (left) with the specified frequencies and their corresponding Fourier transforms (right)

Scilab code for generating 2D sinusoids and displaying the Fourier transforms (modulus):

nx = 100; ny = 100;

x = linspace(-1,1,nx);

y = linspace(-1,1,ny);

[X,Y] = ndgrid(x,y);

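f = 4; //frequency of the sinusoid (varied: 4, 8 and 16)

sinusoid = sin(2*%pi*f*X); //the 2D sinusoid

fft_sinusoid = fft2(sinusoid); //obtain the Fourier transform of the sinusoid

F = abs(fftshift(fft_sinusoid)); //modulus, with the zero frequency moved to the center

imshow(F / max(F)); //imshow needs a normalized image, so scale the modulus to [0, 1]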
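To make the relation between frequency and peak position concrete, here is a small sketch that locates the two peaks of the centered transform for f = 4, 8 and 16. It assumes a 128 × 128 grid built from n/N with n = 0, ..., N-1 (not the `linspace(-1,1,100)` grid above), so that each sinusoid completes a whole number of cycles and its energy falls exactly on two frequency bins; with the original grid the peaks are slightly smeared. The names `freqs`, `offsets` and `c0` are illustrative.

```scilab
N = 128;                          // even size, so the zero frequency lands at N/2 + 1
n = 0:N-1;
[X, Y] = ndgrid(n / N, n / N);    // X varies along rows, Y along columns
c0 = N / 2 + 1;                   // center index after fftshift

freqs = [4 8 16];
offsets = zeros(1, 3);
for i = 1:3
    f = freqs(i);
    z = sin(2 * %pi * f * X);               // f whole cycles across the grid
    F = abs(fftshift(fft2(z)));             // centered modulus
    [r, c] = find(F > 0.5 * max(F));        // the two dominant peaks
    // the peaks sit symmetrically at rows c0 - f and c0 + f in column c0
    offsets(i) = (r(2) - r(1)) / 2;
end
disp(offsets);
```

The peaks should sit f bins on either side of the center, so doubling the frequency of the sinusoid doubles the distance of its peaks from the origin of the transform.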

As a comparison, the unbiased sinusoid (left) and its Fourier transform (bottom) are placed side by side with the biased sinusoid (right) and its transform. Notice that the unbiased and biased 2D sinusoids look identical. This is a limitation of the imshow and imwrite functions: they require normalized images, so the image values must be rescaled before they are shown or written, which alters the information contained in the images because negative values cannot be represented in an image.

2D sinusoids without (left) and with (right) bias

Fourier transforms of the unbiased (left) and biased (right) sinusoids.
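The effect of the bias can be checked with a short sketch. It assumes a whole-cycle 128 × 128 grid as a test setup and uses a min-max rescaling as a stand-in for the normalization that the display functions require (the exact normalization inside `imshow` is not reproduced here). After rescaling, the unbiased and biased sinusoids are indistinguishable, while the difference of their transforms is confined to the zero-frequency term, which grows by bias × N².

```scilab
N = 128;
n = 0:N-1;
[X, Y] = ndgrid(n / N, n / N);
c0 = N / 2 + 1;                 // center index after fftshift

z = sin(2 * %pi * 4 * X);       // unbiased sinusoid
zb = z + 1;                     // same sinusoid with a constant bias of 1

// Min-max rescaling (a stand-in for the display normalization):
// the additive constant cancels, so both images look the same
normz  = (z  - min(z))  / (max(z)  - min(z));
normzb = (zb - min(zb)) / (max(zb) - min(zb));
display_diff = max(abs(normz - normzb));

// In the Fourier domain the bias is not lost: it only adds
// bias * N^2 to the zero-frequency (center) term
D = abs(fftshift(fft2(zb)) - fftshift(fft2(z)));
[r, c] = find(D > 1e-6 * max(D));
mprintf("display diff = %g, DC change = %g at (%d, %d)\n", display_diff, max(D), r, c);
```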

If a non-constant bias is added, it is harder to determine the adjustment needed. For example, if low-frequency sinusoids are added to the interferogram, they can be removed in the Fourier domain: the center of the (shifted) transform of the 2D image holds the low frequencies, so that region can simply be blocked out. If the frequencies of the unwanted signals are known, the task is much easier, since only those frequencies need to be removed. Noise of unknown frequency is more difficult to deal with, but it is often dominated by low frequencies, so the same approach may still work.
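The idea of removing a slowly varying bias by blocking the center of the transform can be sketched as follows. This is a minimal illustration under stated assumptions: the wanted pattern is an f = 8 sinusoid on a whole-cycle 128 × 128 grid, the hypothetical `drift` term is a constant plus an f = 1 sinusoid, and the blocked region is a square of half-width 3 bins around the center, an illustrative choice rather than a tuned filter. Because the grid size is even, applying `fftshift` a second time undoes the shift, and `ifft` applied to a matrix performs the inverse 2D transform.

```scilab
N = 128;
n = 0:N-1;
[X, Y] = ndgrid(n / N, n / N);
c0 = N / 2 + 1;                              // center index after fftshift

wanted = sin(2 * %pi * 8 * X);               // the pattern we want to keep
drift  = 0.5 * sin(2 * %pi * 1 * X) + 0.3;   // hypothetical low-frequency bias
z = wanted + drift;

Fs = fftshift(fft2(z));                      // centered transform

// Block a small square around the center, where the low frequencies live
// (half-width of 3 bins is an illustrative choice)
[R, C] = ndgrid(1:N, 1:N);
low_freq_block = (abs(R - c0) <= 3) & (abs(C - c0) <= 3);
Fs(low_freq_block) = 0;

// Undo the shift (fftshift is its own inverse for even N) and invert
recovered = real(ifft(fftshift(Fs)));

residual = max(abs(recovered - wanted));
mprintf("max residual after filtering: %g\n", residual);
```

If the unwanted frequencies overlapped with those of the wanted pattern, blocking them would also remove part of the pattern, which is why noise of unknown frequency is harder to handle.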

2D rotated sinusoid (left) and the corresponding Fourier transform (right)

Scilab code for generating the rotated sinusoid:

nx = 100; ny = 100;

x = linspace(-1,1,nx);

y = linspace(-1,1,ny);

[X,Y] = ndgrid(x,y);

f = 4; //frequency of sinusoid

theta = 30; //angle of rotation in radians (+ for counterclockwise); for 30 degrees, use theta = 30*%pi/180

z = sin(2*%pi*f*(Y*sin(theta) + X*cos(theta))); //generate rotated sinusoid
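Rotating the sinusoid rotates its pair of Fourier peaks by the same angle. The sketch below measures the angle of the dominant peak; it assumes a 128 × 128 whole-cycle grid, f = 16, and θ = 30 degrees converted to radians (so it differs from the `theta = 30` above, which is in radians). With this choice the Y component of the frequency is 8 whole cycles, while the X component (16 cos 30° ≈ 13.86) falls between bins, so the measured angle only approximates 30°.

```scilab
N = 128;
n = 0:N-1;
[X, Y] = ndgrid(n / N, n / N);    // X along rows (dim 1), Y along columns (dim 2)
c0 = N / 2 + 1;                   // center index after fftshift

f = 16;                           // cycles per grid width
theta = 30 * %pi / 180;           // 30 degrees, converted to radians
z = sin(2 * %pi * f * (Y * sin(theta) + X * cos(theta)));

F = abs(fftshift(fft2(z)));
[r, c] = find(F > 0.9 * max(F));  // the two symmetric peaks

// Keep the peak with a positive column offset and measure its angle
k = find(c > c0);
peak_angle = atan(c(k) - c0, r(k) - c0) * 180 / %pi;
mprintf("peak at offset (%d, %d), angle = %.2f degrees\n", r(k) - c0, c(k) - c0, peak_angle);
```

The dominant peak lands at the bin offset (14, 8), whose angle atan(8/14) is about 29.7 degrees; the small discrepancy comes only from rounding the X frequency to the nearest bin.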

It is also interesting to look at combinations (products) of sinusoids in X and Y. To make the effect easier to see, the sinusoids are multiplied in succession, as presented below.

Combination of the previous sinusoid with a sinusoid in X with f = 8 (left) and the corresponding Fourier transform (right)

Combination of the previous sinusoid with a sinusoid in Y with f = 8 (left) and the corresponding Fourier transform (right)

Scilab code for generating the combination of sinusoids in X and Y:

nx = 100; ny = 100;

x = linspace(-1,1,nx);

y = linspace(-1,1,ny);

[X,Y] = ndgrid(x,y);

z = sin(2*%pi*4*X); //sinusoid in X with f = 4

z1 = sin(2*%pi*4*Y); //sinusoid in Y with f = 4

z2 = sin(2*%pi*4*X) .* sin(2*%pi*4*Y); //combination of the sinusoids in X and Y with f = 4

//i.e. z and z1 multiplied element by element (z2 = z .* z1)

z3 = z2 .* sin(2*%pi*8*X); //combination of the sinusoids in X and Y with f = 4 and a sinusoid in X with f = 8

z4 = z3 .* sin(2*%pi*8*Y); //combination of the sinusoids in X and Y with f = 4 and sinusoids in X and Y with f = 8
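Multiplying images corresponds to convolving their transforms, so each extra sinusoidal factor splits every existing peak into two. For example, sin(4X) sin(8X) = ½[cos(4X) - cos(12X)], so the X frequencies ±4 become ±4 and ±12. A minimal sketch that counts the dominant peaks of z2, z3 and z4, on an assumed whole-cycle 128 × 128 grid rather than the `linspace` grid above, should therefore find 4, 8 and 16 peaks. The names `images` and `npeaks` are illustrative.

```scilab
N = 128;
n = 0:N-1;
[X, Y] = ndgrid(n / N, n / N);

z2 = sin(2 * %pi * 4 * X) .* sin(2 * %pi * 4 * Y);   // X and Y sinusoids, f = 4
z3 = z2 .* sin(2 * %pi * 8 * X);                    // times an X sinusoid, f = 8
z4 = z3 .* sin(2 * %pi * 8 * Y);                    // times a Y sinusoid, f = 8

images = list(z2, z3, z4);
npeaks = zeros(1, 3);
for i = 1:3
    F = abs(fftshift(fft2(images(i))));
    npeaks(i) = size(find(F > 0.5 * max(F)), "*");   // count the dominant peaks
end
disp(npeaks);
```

All peaks within each image have equal height here, because every sinusoidal factor contributes two exponentials of equal weight.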

One important property of the Fourier transform is linearity, which can be demonstrated with an image that is a sum of different sinusoids. A linear transformation distributes over a sum: the transform of the sum equals the sum of the transforms of the individual terms. For example, if the combination of X and Y sinusoids is added to a rotated sinusoid (an X sinusoid rotated by 30 radians), the transform of the sum is the sum of the transform of the X and Y combination and the transform of the rotated sinusoid. The results below confirm this.

Combination of sinusoids in X and Y with f = 4 (left) and the corresponding Fourier transform (right)

Rotated sinusoid in X (angle of rotation = 30 radians) with f = 4 (left) and the corresponding Fourier transform (right)

Sum of the two previous sinusoid images (left) and the corresponding Fourier transform (right)

The transform indeed looks like the sum of the Fourier transforms of the two previous sinusoid images.
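Linearity can also be checked directly on the arrays rather than by eye. The sketch below reuses the `linspace` grid and the `theta = 30` value from the code above; the weights `a` and `b` are arbitrary illustrative values (a = b = 1 gives the plain sum shown in the figures). The relative difference between the transform of the weighted sum and the weighted sum of the transforms should be at the level of floating-point round-off.

```scilab
nx = 100; ny = 100;
x = linspace(-1, 1, nx);
y = linspace(-1, 1, ny);
[X, Y] = ndgrid(x, y);

zxy = sin(2 * %pi * 4 * X) .* sin(2 * %pi * 4 * Y);            // X and Y combination, f = 4
theta = 30;                                                    // same value as in the text (radians)
zrot = sin(2 * %pi * 4 * (Y * sin(theta) + X * cos(theta)));   // rotated sinusoid, f = 4

a = 2; b = -0.5;                           // arbitrary weights for the check
lhs = fft2(a * zxy + b * zrot);            // transform of the weighted sum
rhs = a * fft2(zxy) + b * fft2(zrot);      // weighted sum of the transforms

rel_err = max(abs(lhs - rhs)) / max(abs(lhs));
mprintf("relative difference: %g\n", rel_err);
```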

I would also like to thank Winsome Chloe Rara for her help with this activity, and our professor, Dr. Maricor Soriano, for the guidance.